For this example, I used bogus access credentials and a referred to a bucket and key that (probably) doesn’t exist (certainly not one that I control). Important caveat: just because you can generate a presigned URL doesn’t mean the URL will be valid.

#PERMISSIONS FOR A S3 IMAGE BUCKET CODE#

If you run this code you’ll get a long URL that contains all of the information needed to upload the file: Url = s3_client.generate_presigned_url('put_object', params)

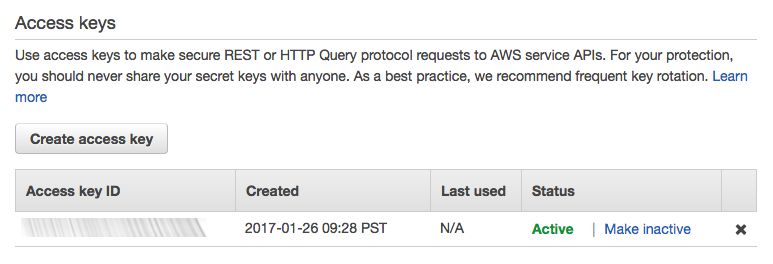

Note that you have to provide the expected content type: a URL signed for text/plain can’t be used to upload a file with type image/jpeg. Using the the S3 SDK, generating a presigned URL is easy: here’s some Python code (which might be run in a web-service Lambda) that will create a pre-signed URL for a PUT request. As an alternative, S3 allows you to generate a pre-signed URL, using the credentials of the application generating the URL. The actual signing process is rather complex, and requires access credentials (which you don’t want to provide to an arbitrary client, lest they be copied and used for nefarious purposes).

The one caveat to interacting with S3, assuming that you haven’t simply exposed your bucket to the world, is that these GETs and PUTs must be signed, using the credentials belonging to a user or role.

#PERMISSIONS FOR A S3 IMAGE BUCKET DOWNLOAD#

For S3, however, the web-service nature is closer to the surface: you can skip the SDK and download files with GET, just as when interacting with a website, or upload files with a PUT or POST.

#PERMISSIONS FOR A S3 IMAGE BUCKET SOFTWARE#

For many services you don’t think about that, and instead interact with the service via an Amazon-provided software development kit (SDK). Pre-signed URLsĪmazon Web Services are, in fact, web services: every operation is implemented as an HTTPS request. For this post I’m going to focus on two approaches: direct PUT using a presigned URL, and multi-part upload using the JavaScript SDK. How do files get uploaded?Īll of the examples that I’ve seen assume that a file magically arrives in S3 how it gets there is “left as an exercise for the reader.” However, this can be quite challenging for real-world applications, especially those running in a user’s browser. In many cases it’s a simple matter of moving the files back into the upload bucket to trigger reprocessing. By going to two buckets, you can eliminate the prefix (although the application might still use prefixes to separate files, for example by client ID).įailure recovery and bulk uploads are also easier when you separate new files from those that have been processed.

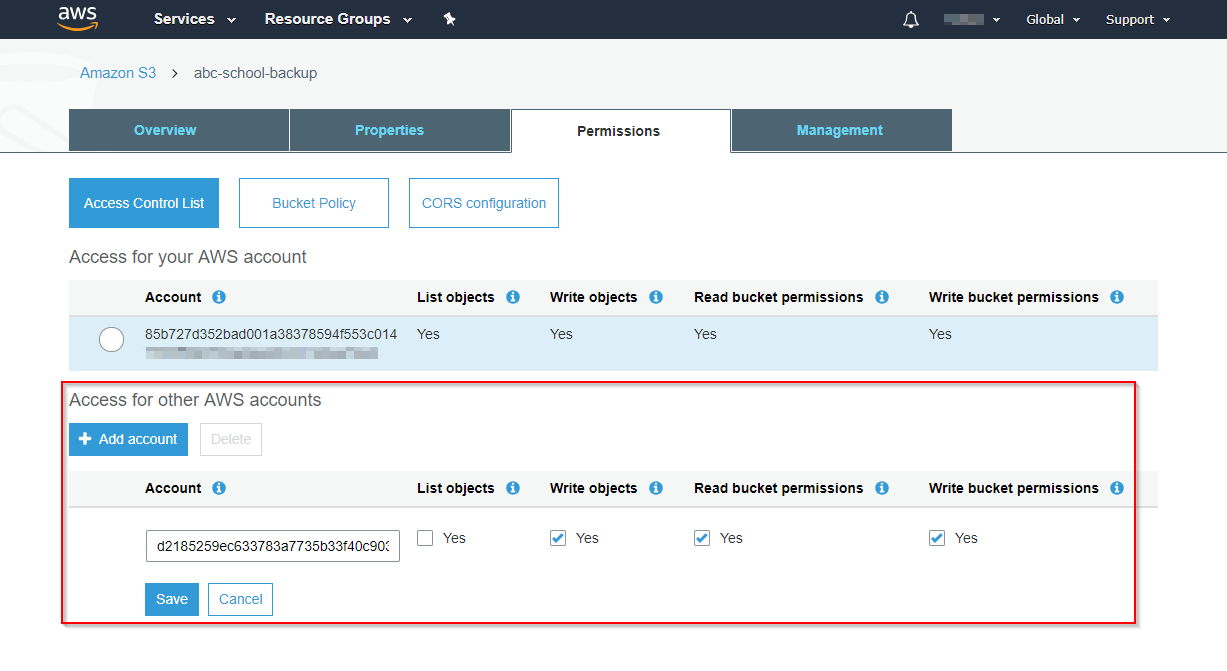

One way to protect against that is to adopt the habit of narrowly-scoped policies, that grant permissions on a single bucket.Ĭonfiguration management also becomes easier: with a shared bucket, everything that touches that bucket - from IAM policies, to S3 life-cycle policies, to application code - has to be configured with both a bucket name and a prefix.

#PERMISSIONS FOR A S3 IMAGE BUCKET ARCHIVE#

This assumes, of course, that those too-broad privileges don’t also allow access to the archive bucket. However, since files are removed from the staging bucket after they’re processed, at any point in time that bucket should have few or no files in it. Clients require privileges to upload files if you accidentally grant too broad a scope, the files in the staging bucket might be compromised. You might also question the point of the archive bucket entirely: once the file has been processed, why keep it? I think there are several answers to this question, each from a different perspective.įirst, as always, security: two buckets minimize blast radius. You can configure an S3 trigger to fire when a file is uploaded with a specific prefix, and could move the file to a different prefix after processing, so could keep everything within a single bucket. The client uploads a file to the first (“staging”) bucket, which triggers the Lambda after processing the file, the Lambda moves it into the second (“archive”) bucket.įrom a strictly technical perspective, there’s no need to have two buckets. ArchitectureĪs the title says, the architecture uses two buckets and a Lambda function. This post is a “deep dive” on the standard tutorial, looking at architectural decisions and operational concerns in addition to the simple mechanics of triggering a Lambda from an S3 upload. Nor do they look at how the files get into S3 in the first place, which is a key part of any application design.

#PERMISSIONS FOR A S3 IMAGE BUCKET HOW TO#

But the tutorials that I’ve seen only look at the “happy path”: they don’t explore what happens (and how to recover) when things go wrong. As a tutorial, it can be implemented in under 15 minutes with canned code, and is something that a lot of people find useful in real life. Triggering a Lambda by uploading a file to S3 is one of the introductory examples of the service.
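The SDK call hides the signing work. To show roughly what it does, and why the content type matters, here is a minimal sketch of AWS Signature Version 4 query-string signing for a PUT, using only the Python standard library. It makes three assumptions: virtual-hosted-style URLs, an unsigned payload, and long-lived credentials with no session token. The function names `presign_put` and `upload_request`, along with the bucket and credential values, are hypothetical. This is an illustration, not a replacement for the SDK’s `generate_presigned_url`.

```python
import datetime
import hashlib
import hmac
import urllib.parse
import urllib.request


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def _quote(value: str, safe: str = "-_.~") -> str:
    # SigV4 URI-encoding: everything except unreserved characters is percent-encoded.
    return urllib.parse.quote(value, safe=safe)


def presign_put(bucket, key, content_type, access_key, secret_key,
                region="us-east-1", expires=300, now=None):
    """Hypothetical helper: SigV4 query-signed URL for an S3 PUT (sketch only).

    Assumes virtual-hosted-style URLs, UNSIGNED-PAYLOAD, and no session token.
    """
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    datestamp = now.strftime("%Y%m%d")
    host = f"{bucket}.s3.{region}.amazonaws.com"
    scope = f"{datestamp}/{region}/s3/aws4_request"

    # Content-Type is a signed header, so the uploader must send exactly this value.
    headers = {"content-type": content_type, "host": host}
    signed_headers = ";".join(sorted(headers))
    query = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": signed_headers,
    }
    canonical_query = "&".join(
        f"{_quote(k)}={_quote(v)}" for k, v in sorted(query.items()))
    canonical_uri = "/" + _quote(key, safe="-_.~/")  # S3 keeps '/' in the path
    canonical_headers = "".join(f"{k}:{headers[k].strip()}\n" for k in sorted(headers))
    canonical_request = "\n".join([
        "PUT", canonical_uri, canonical_query,
        canonical_headers, signed_headers, "UNSIGNED-PAYLOAD"])

    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()])

    # Derive the signing key; the secret itself never appears in the URL.
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), datestamp)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, "s3")
    k_signing = _hmac(k_service, "aws4_request")
    signature = hmac.new(k_signing, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()

    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"


def upload_request(url, data, content_type):
    """Hypothetical helper: build (but don't send) the client's PUT for a presigned URL."""
    return urllib.request.Request(url, data=data, method="PUT",
                                  headers={"Content-Type": content_type})
```

Because `content-type` is one of the signed headers, a client that sends `Content-Type: text/plain` to a URL signed for `image/jpeg` causes S3 to compute a different signature, and S3 rejects the request. Nothing in this sketch contacts AWS. For the same reason, producing a URL says nothing about whether the bucket, key, or credentials are valid.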

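Returning to the two-bucket architecture, the Lambda side can be sketched as follows. This is a minimal illustration, not the author’s code. It assumes the function is triggered by an S3 event notification on the staging bucket and reads the archive bucket name from a hypothetical `ARCHIVE_BUCKET` environment variable. The hypothetical `process_file` stands in for whatever the application actually does. The S3 client can be injected, which keeps the sketch testable without AWS.

```python
import os
import urllib.parse

# Hypothetical configuration: the archive bucket name comes from the environment.
ARCHIVE_BUCKET = os.environ.get("ARCHIVE_BUCKET", "example-archive-bucket")


def process_file(bucket, key):
    """Hypothetical placeholder for the application's real processing step."""


def handler(event, context, s3=None):
    if s3 is None:  # tests can inject a fake client; Lambda uses boto3
        import boto3
        s3 = boto3.client("s3")
    moved = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in S3 event notifications (spaces become '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        process_file(bucket, key)
        # "Move" = copy into the archive bucket, then delete from staging.
        # If processing or the copy raises, the delete never runs and the
        # file stays in the staging bucket for a retry.
        s3.copy_object(Bucket=ARCHIVE_BUCKET, Key=key,
                       CopySource={"Bucket": bucket, "Key": key})
        s3.delete_object(Bucket=bucket, Key=key)
        moved.append(key)
    return moved
```

Deleting only after a successful copy means a failure leaves the file where it was uploaded, which fits the reprocessing story above. Note that `copy_object` copies objects of up to 5 GB in a single request; larger files need a multipart copy.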